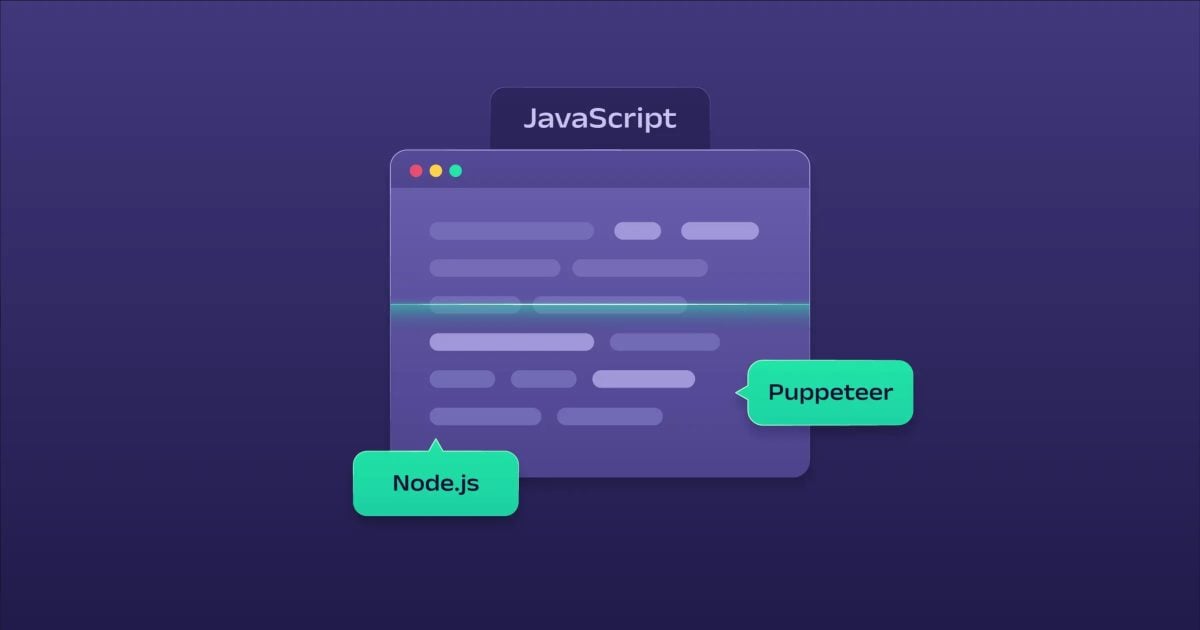

Web scraping in JavaScript is an effective way to collect web data, whether the content is static or dynamic. This guide covers the prerequisites, a step-by-step tutorial, and best practices.

Prerequisites for web scraping in JavaScript

Before coding, you must prepare the environment. To do this, you need two basic tools:

- ✅ Node.js: an open-source, cross-platform JavaScript runtime. It lets you run JS code outside of a browser.

- ✅ npm (Node Package Manager): the package manager bundled with Node.js, useful for quickly installing libraries.

For libraries, here are the most popular:

- ✅ Puppeteer and Playwright for scraping dynamic sites. They drive a real browser and load pages just like a user would.

- ✅ Cheerio for static scraping. It is lightweight, fast, and easy to use.

Web scraping in JavaScript

Let's get down to business with this short tutorial.

Step 1: Installation and configuration

- Download Node.js, then install it. Verify the installation in the terminal:

    node -v
    npm -v

- Create a Node.js project in your terminal:

    mkdir my-scraping
    cd my-scraping
    npm init -y

This creates a Node.js project with a package.json file.

- Install the necessary libraries:

👉 For a static page (Cheerio)

    npm install axios cheerio

👉 For a dynamic page (Puppeteer)

    npm install puppeteer

Step 2: Creating a scraping script

- Scraping a static page with Cheerio

    // Import libraries
    const axios = require('axios');
    const cheerio = require('cheerio');

    // URL of the page to scrape
    const url = "https://exemple.com";

    // Main function
    async function scrapePage() {
      try {
        // Download HTML content
        const { data } = await axios.get(url);

        // Load HTML with Cheerio
        const $ = cheerio.load(data);

        // Example: retrieve all h1 titles
        const titles = [];
        $("h1").each((i, elem) => {
          titles.push($(elem).text().trim());
        });

        // Display results
        console.log("Titles found:", titles);
      } catch (error) {
        console.error("Error while scraping:", error);
      }
    }

    // Run script
    scrapePage();

👉 You can replace https://exemple.com with the URL of the page you want to scrape and modify the selector $("h1") to target what you're interested in (e.g. $("p"), $(".class"), $("#id"), etc.).

- Scraping a dynamic page with Puppeteer

    // Import Puppeteer
    const puppeteer = require("puppeteer");

    // URL of the page to scrape
    const url = "https://exemple.com";

    async function scrapePage() {
      // Launch a headless browser
      const browser = await puppeteer.launch({ headless: true });
      const page = await browser.newPage();

      try {
        // Go to the page and wait until network activity has settled
        await page.goto(url, { waitUntil: "networkidle2" });

        // Example: extract text from all h1 titles
        const titles = await page.$$eval("h1", elements =>
          elements.map(el => el.textContent.trim())
        );

        console.log("Titles found:", titles);
      } catch (error) {
        console.error("Error while scraping:", error);
      } finally {
        // Close browser
        await browser.close();
      }
    }

    // Run script
    scrapePage();

Step 3: Managing extracted data

Extracted data can be saved as CSV for use in Excel, or as JSON for database integration.

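    // Import the file system module and the CSV parser
    // (Parser is assumed to come from the json2csv package: npm install json2csv)
    const fs = require("fs");
    const { Parser } = require("json2csv");

    async function scrapeAndSave() {
      try {
        // Placeholder data: replace with the results extracted in Step 2
        const results = [{ title: "Example title" }];

        // Save as JSON
        fs.writeFileSync("results.json", JSON.stringify(results, null, 2), "utf-8");
        console.log("✅ Data saved in results.json");

        // Save as CSV
        const parser = new Parser();
        const csv = parser.parse(results);
        fs.writeFileSync("results.csv", csv, "utf-8");
        console.log("✅ Data saved in results.csv");
      } catch (error) {
        console.error("❌ Error while scraping:", error);
      }
    }

    scrapeAndSave();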
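If you would rather not rely on a CSV library, the conversion can be sketched with built-in JavaScript alone. The `toCsv` and `escapeCsvField` helpers and the sample `results` rows below are hypothetical and not part of the tutorial's script. The quoting rules follow the usual CSV convention: fields containing commas, double quotes, or line breaks are wrapped in double quotes. The sketch assumes every row is a flat object with the same keys.

```javascript
// Minimal CSV serializer using only built-in JavaScript features.
// Assumption: every row is a flat object sharing the keys of the first row.
function escapeCsvField(value) {
  const text = value === null || value === undefined ? "" : String(value);
  // Quote the field if it contains a comma, a double quote, or a line break
  if (/[",\r\n]/.test(text)) {
    return '"' + text.replace(/"/g, '""') + '"';
  }
  return text;
}

function toCsv(rows) {
  if (rows.length === 0) return "";
  // Use the keys of the first row as the header line
  const headers = Object.keys(rows[0]);
  const lines = [headers.map(escapeCsvField).join(",")];
  for (const row of rows) {
    lines.push(headers.map(h => escapeCsvField(row[h])).join(","));
  }
  return lines.join("\n");
}

// Sample data standing in for the scraped results
const results = [
  { title: "Hello, world", url: "https://exemple.com" },
  { title: 'Say "hi"', url: "https://exemple.com/hi" },
];

const csv = toCsv(results);
console.log(csv);
```

The resulting string can then be written to disk with `fs.writeFileSync("results.csv", csv, "utf-8")`, in the same way as the JSON output.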

Best practices for web scraping in JavaScript

Before running your scripts, it is essential to adopt certain best practices to ensure that your scraping remains effective.

- 🔥 Respect the robots.txt file: this is the golden rule for avoiding legal and ethical problems.

- 🔥 Handle CAPTCHAs and blocking with proxies or anti-CAPTCHA services.

- 🔥 Make your script more robust: add error and exception handling to avoid crashes.
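Two of these practices can be sketched in a few lines. The helper names `isPathAllowed` and `withRetry` are hypothetical, as are the default retry count and delay. The robots.txt check is deliberately simplified: it only reads `Disallow` rules that apply to `User-agent: *` and ignores wildcards, `Allow` rules, and agent-specific groups, so treat it as an illustration rather than a complete parser.

```javascript
// Simplified robots.txt check (assumption: only "Disallow" rules in
// groups that apply to "User-agent: *" are considered).
function isPathAllowed(robotsTxt, path) {
  let groupApplies = false;
  let lastWasAgent = false;
  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.split("#")[0].trim(); // drop comments
    const sep = line.indexOf(":");
    if (sep === -1) continue;
    const field = line.slice(0, sep).trim().toLowerCase();
    const value = line.slice(sep + 1).trim();
    if (field === "user-agent") {
      // Consecutive User-agent lines belong to the same group
      groupApplies = (lastWasAgent && groupApplies) || value === "*";
      lastWasAgent = true;
    } else {
      lastWasAgent = false;
      if (field === "disallow" && groupApplies && value !== "" && path.startsWith(value)) {
        return false;
      }
    }
  }
  return true;
}

// Retry an async task, doubling the wait between attempts.
async function withRetry(task, { retries = 3, baseDelayMs = 500 } = {}) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await task();
    } catch (error) {
      lastError = error;
      if (attempt < retries) {
        const delay = baseDelayMs * 2 ** attempt;
        await new Promise(resolve => setTimeout(resolve, delay));
      }
    }
  }
  // All attempts failed: surface the last error to the caller
  throw lastError;
}

// Offline demonstration with a hand-written robots.txt
const sampleRobots = "User-agent: *\nDisallow: /private/\n";
console.log(isPathAllowed(sampleRobots, "/blog/post"));    // true
console.log(isPathAllowed(sampleRobots, "/private/data")); // false
```

A scraping call could then be wrapped as `withRetry(() => scrapePage())`, so that a transient network error triggers a new attempt instead of ending the script.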

What alternatives should you consider?

Web scraping is not limited to JavaScript. There are several other options available to you, such as:

- Python: Scrapy and BeautifulSoup work wonders for efficient data extraction.

- PHP: ideal for web developers who want to integrate scraping directly into their projects.

- Web scraping tools such as Bright Data, Octoparse, and Apify: they're a good fit if you don't want to code but still want full control over your data.

FAQs

How do I scrape a site with JavaScript?

To scrape a site with JavaScript, you need to follow a few key steps:

- Identify whether the page is static or dynamic.

- For a static page, use Cheerio to extract HTML directly.

- For a dynamic page, use Puppeteer or Playwright to simulate a browser, wait for the content to load completely, then extract data.

- Structure and save the data in a usable format such as JSON or CSV.
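For the first step, one rough heuristic is to check whether text you can see in the browser is already present in the raw HTML returned by the server. If it is, the page is probably static; if not, it is probably rendered by JavaScript. The sketch below makes that assumption explicit. The helper names `appearsInRawHtml` and `checkPage` are hypothetical, and the tag stripping is a crude regular expression rather than a real HTML parser.

```javascript
// Heuristic: text visible in the browser that also appears in the raw HTML
// suggests a static page. Script contents are removed first, because text
// inside a script is not visible until the script runs.
function appearsInRawHtml(html, visibleText) {
  const text = html
    .replace(/<script[\s\S]*?<\/script>/gi, " ") // drop inline scripts
    .replace(/<[^>]+>/g, " ")                    // drop remaining tags
    .replace(/\s+/g, " ");                       // collapse whitespace
  return text.toLowerCase().includes(visibleText.toLowerCase());
}

// Fetch a page and classify it (global fetch requires Node.js 18 or later)
async function checkPage(url, visibleText) {
  const response = await fetch(url);
  const html = await response.text();
  return appearsInRawHtml(html, visibleText) ? "static" : "dynamic";
}

// Offline demonstration with two hand-written HTML samples
const staticHtml = "<html><body><h1>Product list</h1></body></html>";
const dynamicHtml =
  '<html><body><div id="root"></div><script>render("Product list")</script></body></html>';

console.log(appearsInRawHtml(staticHtml, "Product list"));  // true
console.log(appearsInRawHtml(dynamicHtml, "Product list")); // false
```

Against a live site, this could be called as `checkPage("https://exemple.com", "some visible heading").then(console.log)`. A "dynamic" result points toward Puppeteer or Playwright, a "static" one toward Cheerio.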

What's the best JavaScript web scraping tool?

It all depends on the type of site:

- 🔥 Cheerio: fast and lightweight, perfect for static pages.

- 🔥 Puppeteer: ideal for simulating a real browser and managing dynamic content.

- 🔥 Playwright: similar to Puppeteer, but with more advanced, cross-browser features.

What's the best programming language for scraping?

There is no single best programming language for scraping. The choice will depend on your project and your environment.

- 🔥 Python for projects you need to get running quickly.

- 🔥 JavaScript if you already work in the web ecosystem.

- 🔥 PHP to integrate scraping directly into an existing website.

- 🔥 Code-free tools such as Bright Data, Octoparse and Apify.

To conclude, web scraping in JavaScript simplifies data collection, whether with Cheerio, Puppeteer, or Playwright. What about you? What techniques do you use?

💬 Share your experiences or ask your questions in the comments!