An exclusive IFOP poll for Alucare.fr.

Deepfake definition:

Deepfake is an artificial intelligence (AI)-based image synthesis technique that makes it possible to create or modify videos and audio recordings in a highly realistic way. This technology uses deep learning methods, notably generative adversarial networks (GANs), to superimpose and combine images and sounds. Deepfakes are often used to produce content in which people, typically celebrities or public figures, appear to say or do things they have never actually said or done.

Although deepfakes can have legitimate applications in entertainment or content creation (for example, Candy.ai for creating a virtual girlfriend), they pose major ethical and legal problems because of their potential for misinformation, manipulation and invasion of privacy (for example, applications that undress a person). The ability to create false but persuasive content increases the risk of deception and has serious implications for trust in the media and information (with examples such as "Deepnude").

With the breathtaking progress of artificial intelligence, the world is now confronted on a daily basis with deepfakes, those strikingly realistic fictitious images and videos that can be used for entertainment purposes, but also for large-scale disinformation and denigration.

While many public figures are regularly targeted by the misappropriation of their image, anonymous people are not immune, as shown recently in Spain by the case of very young girls who fell victim to sexual photo montages circulated on social networks.

Are the French aware of this phenomenon? Do they feel capable of dealing with it? Do they fear it for themselves and for democracy?

To find out, Alucare commissioned IFOP to survey more than 2,000 people about the upheaval at work in the way we perceive, and will perceive, reality. Their answers reflect the uncertainty into which these formidable technological advances are plunging them, their desire for clear identification of the content concerned, and their fears of being confronted by it personally or during the next presidential election.

Distinguishing truth from falsehood: a problem for two-thirds of French people

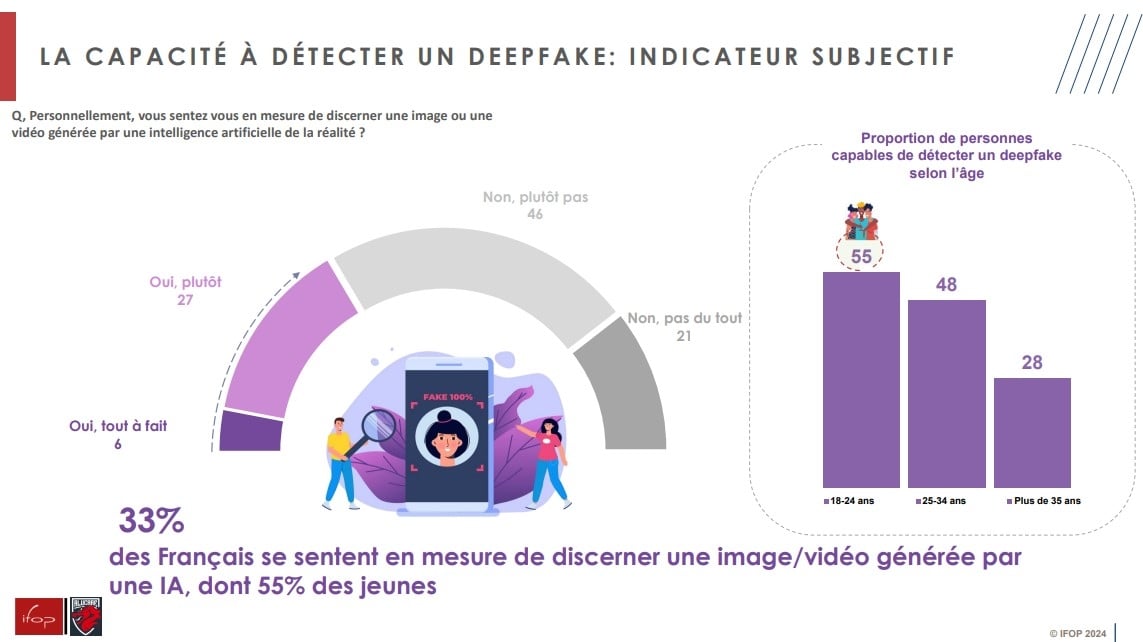

Whether they're not really familiar with the prowess of artificial intelligence or, on the contrary, know just how powerful it can be, only a third (33%) of French people feel able to detect an AI-generated image or video. Only 6% are sure of this, demonstrating the uncertainty that reigns in the population on the subject. Generally more at ease with emerging technologies than their elders, young people are the ones who claim to be the most capable of spotting a deepfake: over half (55%) of 18-24 year-olds say they are, compared with 28% of over-35s and 12% of over-65s. Men are also far more confident than women in their powers of observation, with 40% of them believing themselves capable of spotting such images, compared with 28% of women.

Test: some images are more deceptive than others

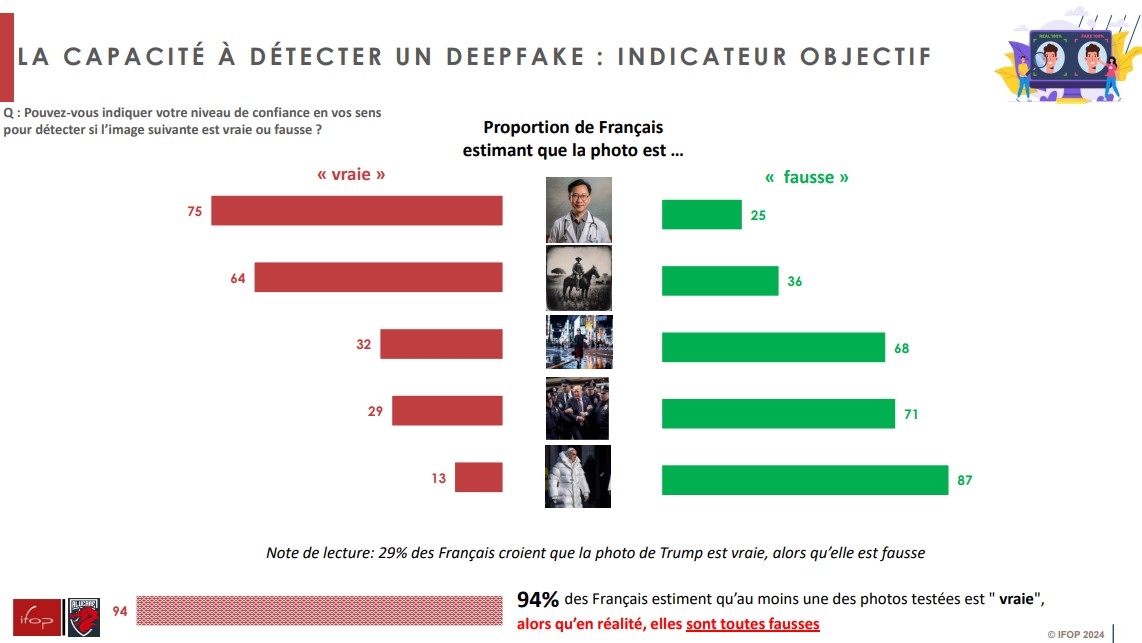

The test proposed by IFOP perfectly illustrates the difficulty of discerning an image produced by artificial intelligence from a photo captured in real life. Invited to examine five images, participants were asked to indicate which they thought had or had not been generated by AI. But in reality, all the images presented were "fake".

The particularly realistic portrait of a doctor fooled three quarters (75%) of respondents. Almost two-thirds (64%) believed that an old-looking photo of a man on horseback, typical of shots taken at the beginning of the last century but entirely composed by AI, was real. On the other hand, only 32% believed in the veracity of an image of a woman walking in the streets of Tokyo, an image widely used by OpenAI to announce the release of its Sora video generator. Regularly in the headlines for his run-ins with the American justice system, former President Donald Trump was shown closely surrounded by several police officers. This "photo" was deemed credible by more than one in four French people (29%), with slightly more women (32%) believing in its authenticity than men (27%). Finally, the image of Pope Francis in a white down jacket, widely shared on social networks a few months ago, fooled only 13% of those questioned. Overall, 94% of respondents believed in the veracity of at least one of the images submitted to them.

90% of the French in favor of a label of origin

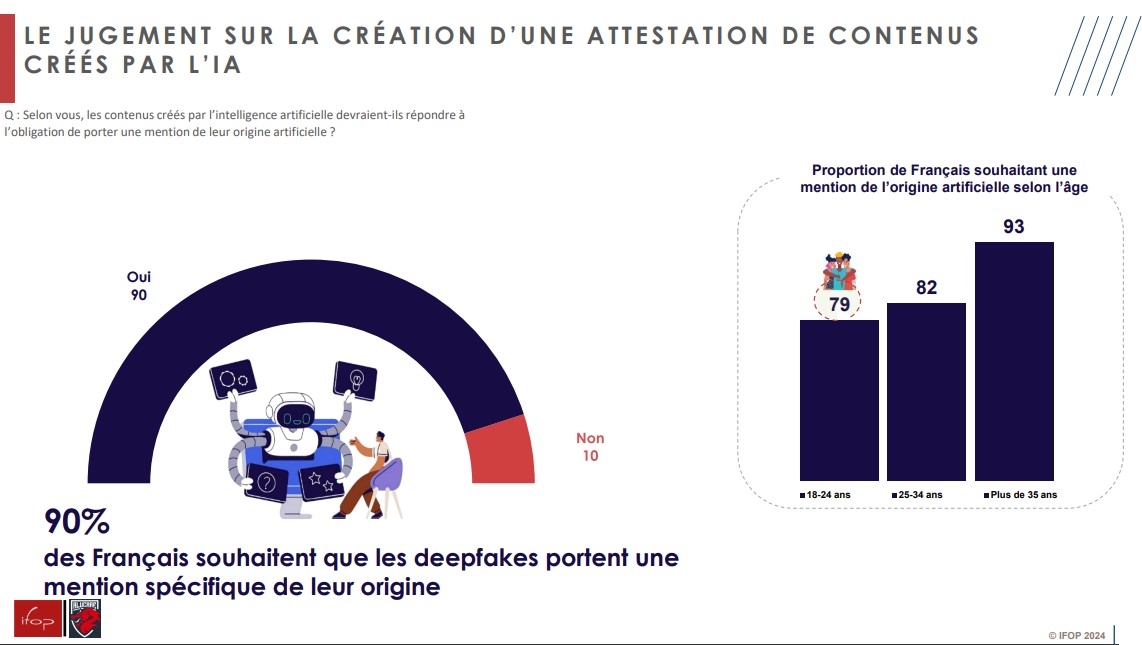

Clearly aware of the risks of misinformation inherent in the spread of totally realistic images produced by AI, the overwhelming majority (90%) of French people are in favor of a label identifying deepfakes as artificially created. This expectation is higher among the over-35s (93% are in favor) than among their younger counterparts (79% among 18-24 year-olds).

Clues not always easy to discern

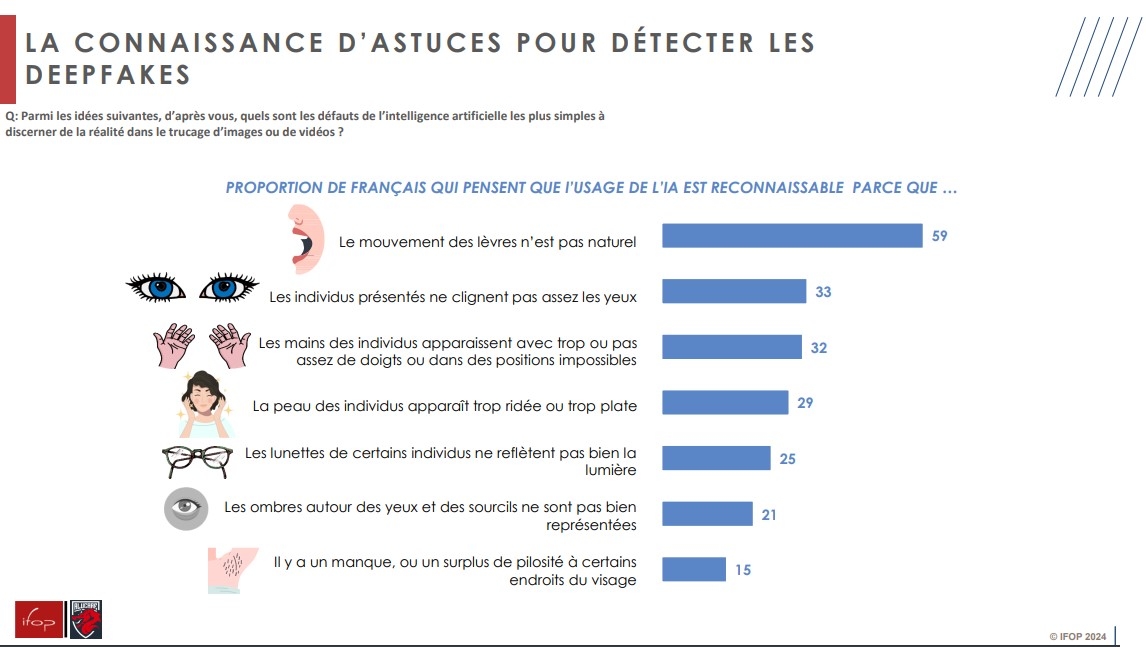

In the space of a few years, or even a few months for some, artificial intelligence tools have made impressive progress in making their visual productions ever more realistic. However, some details still betray their true nature, even if the French remain circumspect about many of them. For 59% of French people, the unnatural movement of the lips of a character speaking in a video is a clue that they find easy to spot. Similarly, the fact that the individuals pictured don't blink often enough is a good indicator for a third (33%) of the French.

In a similar proportion, 32% point to the difficulties, well documented but increasingly less relevant, that image-generating AIs have in correctly reproducing hands. Wrinkled or overly flat skin (29%), glasses that don't reflect light correctly (25%), shadows around the eyes that aren't precise enough (21%) or a lack of hair in certain areas of the face (15%) are all imperfections seen as potential warning signs. Nevertheless, only a minority of respondents consider them easy to spot.

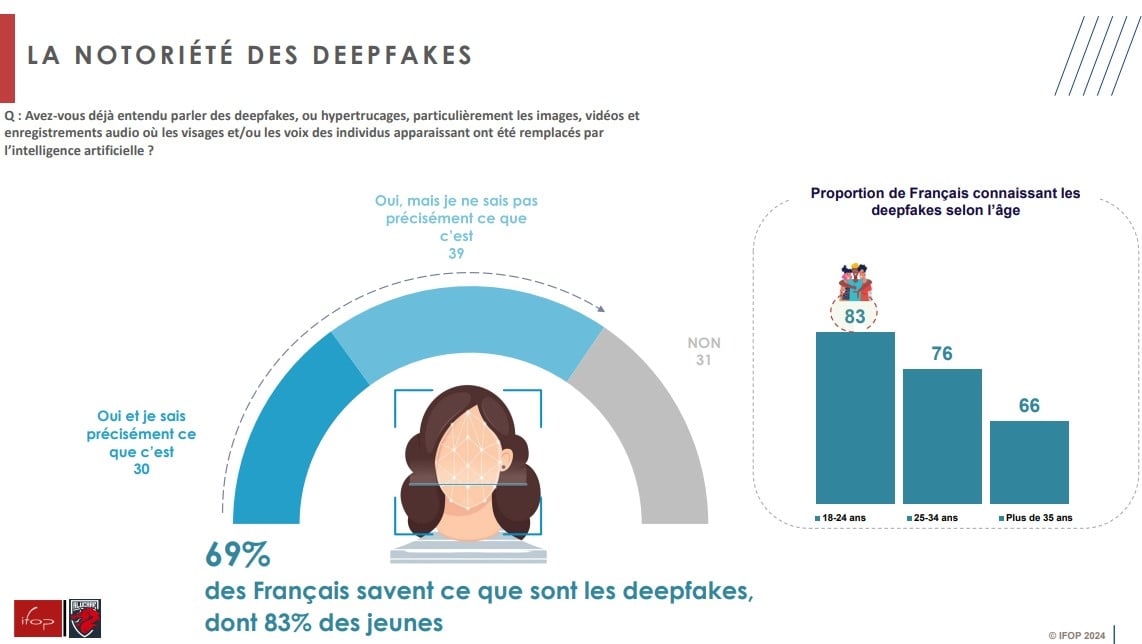

Partial knowledge of deepfakes

From singer Taylor Swift, who fell victim to pornographic deepfakes earlier this year, to President Volodymyr Zelensky's false announcement of the surrender of Ukrainian troops, to Ursula von der Leyen's very recent, also fake, statement on the forthcoming European elections, AI-generated videos featuring real people are multiplying. And it's not just celebrities and politicians who are affected: last September, in Almendralejo, Spain, some thirty girls aged 11 to 17 were the target of deepfakes depicting them in the nude.

Faced with this growing and worrying phenomenon, almost 7 out of 10 French people (69%) say they have already heard of deepfakes, but only 30% know exactly what they are. Once again, it's the younger generations who are most aware of the phenomenon: 83% of 18-24 year-olds (vs. 66% of over-35s) have heard of it, and 50% (vs. 15% of over-65s) have a clear idea of what it is. The gap between women and men is not insignificant: while 24% of the former clearly know what deepfakes are, 37% of the latter do.

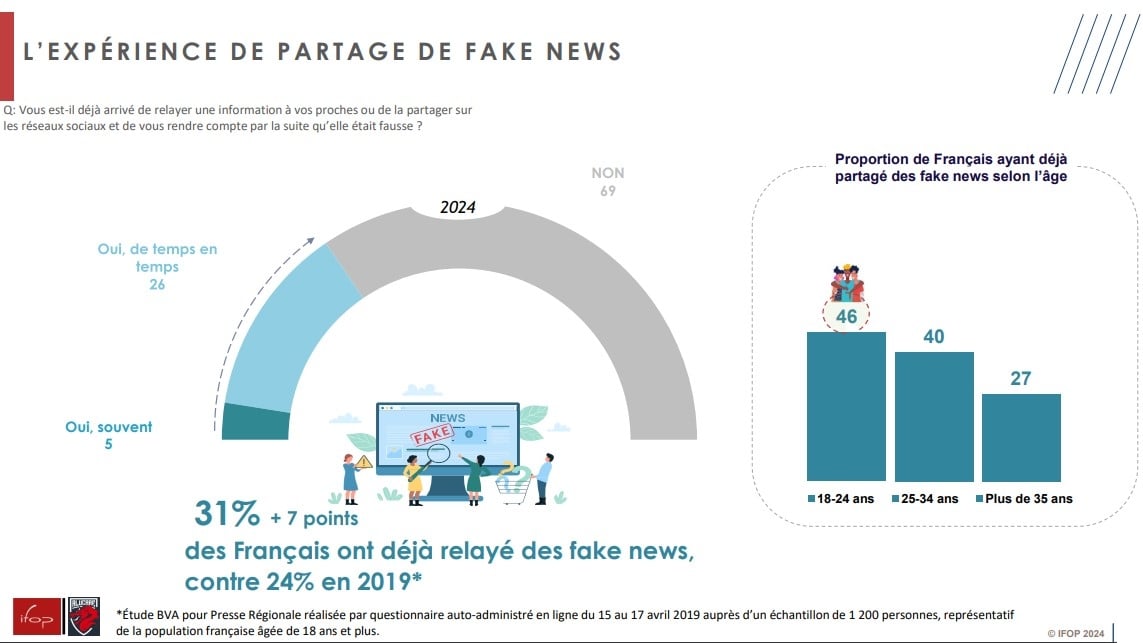

One in three French people has already spread fake news

Deepfakes and fake news belong to the same family, one that feeds disinformation and the manipulation of public opinion. Some French people admit to taking part in this manipulation, even if unwittingly. For example, 31% of those surveyed (7 points more than in a 2019 BVA study) have already relayed information to those around them that was later proven to be false. Young people (46%) are more likely to have done so than other age groups. In contrast, over three-quarters of the over-50s say they have never done so.

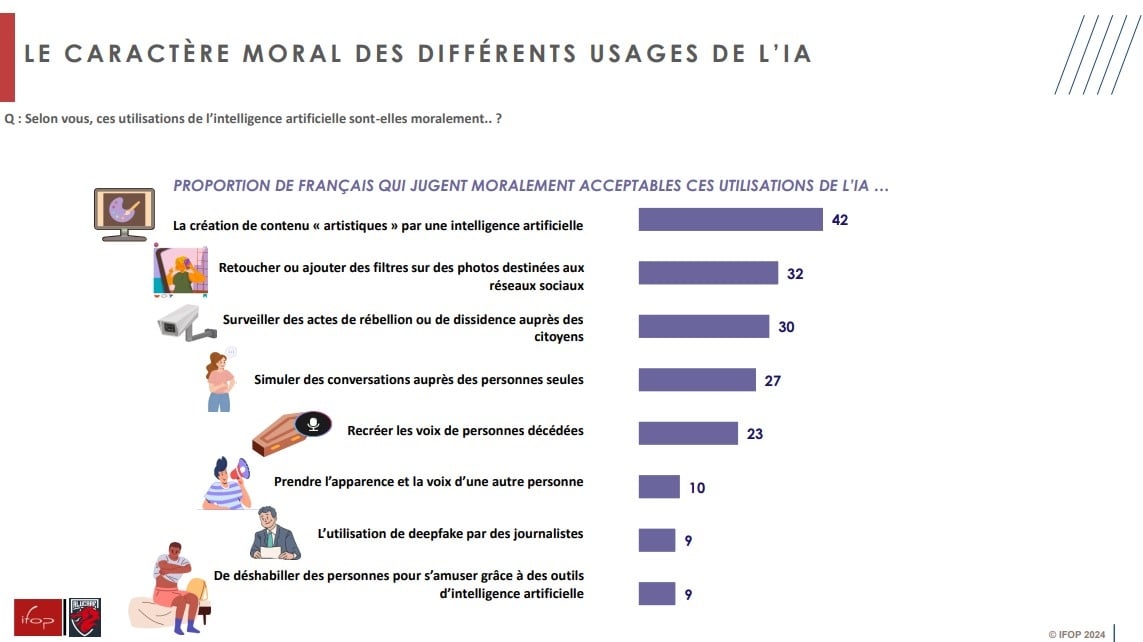

A sometimes dubious moral acceptance

As we've seen, artificial intelligence today provides a wealth of resources in the fields of image, video and voice cloning. Among the various uses of AI presented to them in this survey, none is deemed morally acceptable by the majority of French people. The best accepted are, respectively, the creation of "artistic" content by artificial intelligence (42% consider this morally acceptable) and the retouching of photos on social networks using filters (32%).

More surprising - and certainly more worrying - is the fact that 30% of respondents agree that AI should be used to monitor acts of rebellion or dissent by citizens. In this case, young people are less critical than their elders: 34% of the under-35s find the practice acceptable, compared with 29% of the over-35s. Important generational and gender differences also exist on the issue of undressing people with AI for fun. While only 9% of French people as a whole are not shocked by such use, the figure rises to 17% among the under-35s (and up to 26% of men in this age bracket) versus 6% among their elders. Similarly, 13% of men consider this artificial exposure to be morally acceptable, compared with 4% of women.

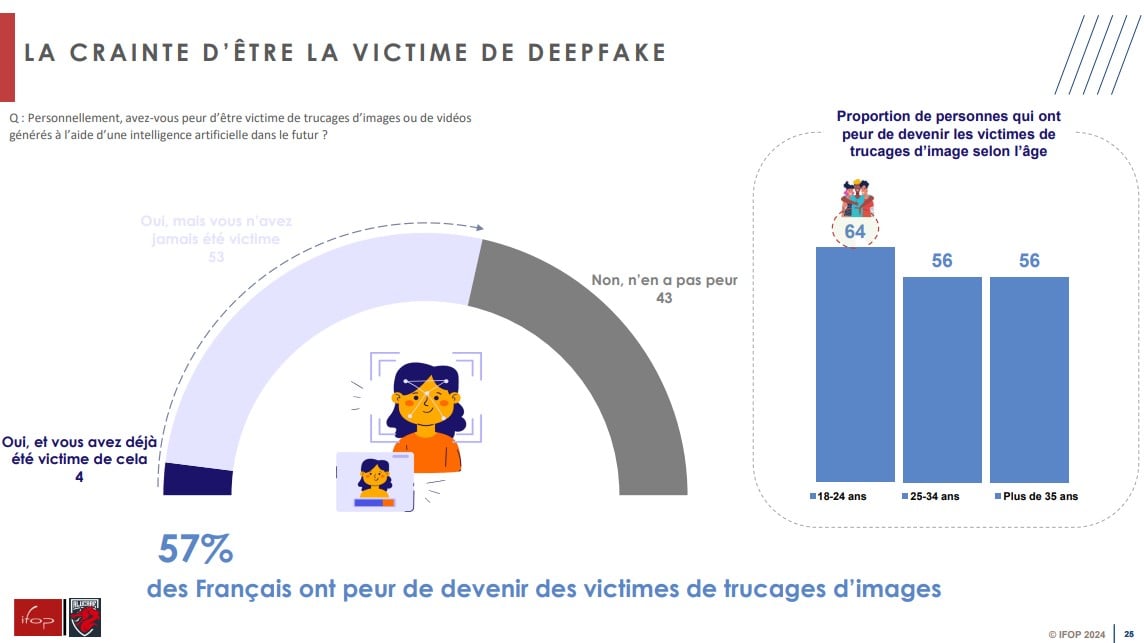

13% of under-25s have fallen victim to deepfakes

Aware of the widespread availability of tools that make it easy to create deepfakes, more than half of respondents (57%) expressed the fear of becoming a victim themselves, as has already been the case for 4% of them and 13% of the under-25s. While younger people are indeed the most worried (64% of 18-24 year-olds fear false images concerning them), other age groups are hardly more serene, with 56% of over-25s also expressing concern.

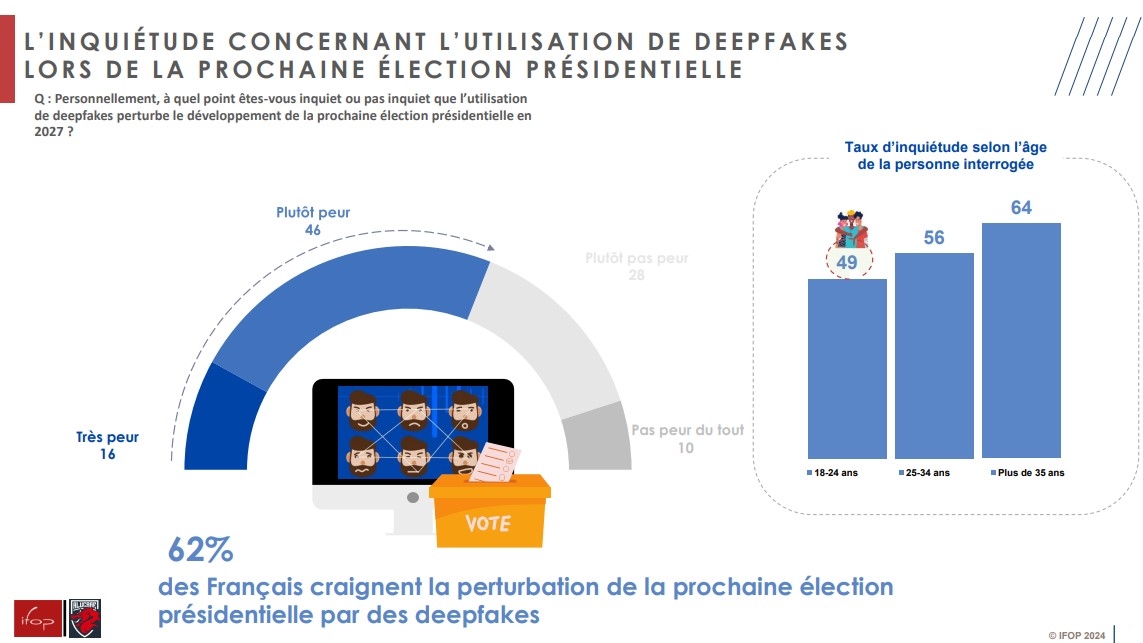

A threat to the presidential election?

While they fear deepfakes for themselves, the French are also apprehensive that such practices could interfere with the next presidential election, especially as artificial intelligence tools are certain to be even more powerful by then. More than 6 out of 10 French people (62%) say they are worried that deepfakes could disrupt the vote in 2027, including 16% who say they are very afraid. Although more concerned than average about the misuse of their own image, young people are much less concerned about the potential impact of false images during election campaigns: less than half (49%) of under-25s are afraid of this, while 70% of over-65s fear it.

Study carried out by IFOP for Alucare.fr from March 5 to 8, 2024 by self-administered questionnaire among a sample of 2,191 people, representative of the French population aged 18 and over, including 551 young people under 35.

Sidebar

Deepfakes in the SREN bill

On Tuesday March 26, a joint committee of elected representatives from the French National Assembly and Senate will study the bill to secure and regulate digital space (SREN). Tabled by the government and adopted by the deputies on October 17, 2023, it aims to reduce the risks associated with everyday use of the Internet for individuals and businesses. It includes provisions in a wide range of areas, such as the online protection of minors, the protection of citizens in the digital environment, respect for competition in the data economy and the strengthening of digital regulation. During its autumn session, the Senate added two articles penalizing the publication of deepfakes without consent and the publication of sexual deepfakes. Penalties could include up to two years' imprisonment and a €60,000 fine.